Learn how to import the Bill of Materials into Isograph's Reliability Workbench

Blog

Optimizing Condition Monitoring by Bill Keeter

Isograph's software for condition monitoring offers comprehensive solutions tailored to diverse industries. By leveraging advanced algorithms and predictive analytics, it enables proactive maintenance strategies to optimize asset performance. With its intuitive interface and customizable features, Isograph's software empowers users to effectively monitor equipment health and minimize downtime.

Isograph’s Fault Tree+ software includes Markov

A Markov model, named after the Russian mathematician Andrey Markov, is a mathematical model of a sequence of events in which the probability of the next event depends only on the current state, not on how that state was reached. The concept is applied across many fields because of its predictive capabilities and versatility.

Markov models are widely employed to understand and predict complex systems with dynamic states, making them invaluable in fields like finance, biology, and engineering. By modeling transitions between different states, Markov processes can reveal insights into system behavior over time.
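To make the idea of state transitions concrete, here is a minimal sketch of a two-state Markov chain for a single repairable component. It is only an illustration of the general technique, not how Fault Tree+ or any Isograph product works internally. The state names, the per-step probabilities `P_FAIL` and `P_REPAIR`, and the helper functions are all assumptions made up for this example.

```python
# Two-state discrete-time Markov chain: a component is either working or failed.
# All numbers below are illustrative assumptions, not data from a real system.

def step(dist, matrix):
    """Advance a state distribution one step: new[j] = sum_i dist[i] * matrix[i][j]."""
    n = len(dist)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def distribution_after(dist, matrix, steps):
    """Apply the transition matrix repeatedly and return the final distribution."""
    for _ in range(steps):
        dist = step(dist, matrix)
    return dist


P_FAIL = 0.01    # assumed chance a working component fails during one step
P_REPAIR = 0.20  # assumed chance a failed component is repaired during one step

# Row = current state, column = next state; state order is [working, failed].
TRANSITIONS = [
    [1 - P_FAIL, P_FAIL],
    [P_REPAIR, 1 - P_REPAIR],
]

start = [1.0, 0.0]  # the component starts in the working state
long_run = distribution_after(start, TRANSITIONS, 1000)

# For a two-state chain the long-run probability of being in the working state
# has the closed form P_REPAIR / (P_FAIL + P_REPAIR).
steady_state_availability = P_REPAIR / (P_FAIL + P_REPAIR)

print(f"P(working) after 1000 steps: {long_run[0]:.4f}")
print(f"Closed-form steady state:    {steady_state_availability:.4f}")
```

The next distribution is computed from the current one alone, which is exactly the Markov assumption. Running the chain for many steps converges to the same long-run availability as the closed-form expression.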

In the realm of reliability engineering, Isograph's Fault Tree+ software tool leverages Markov models to assess and enhance system reliability. Users can construct fault trees, representing potential system failures, and integrate Markov analysis to simulate the dynamic evolution of these faults. This integration allows for a comprehensive evaluation of system reliability, aiding in proactive maintenance and risk mitigation.

Organizations benefit from Isograph's Fault Tree+ software by gaining a deeper understanding of their systems, identifying vulnerabilities, and making informed decisions to enhance overall reliability. The utilization of Markov in Fault Tree+ underscores the tool's effectiveness in providing robust solutions for industries reliant on dependable systems.

Get a FREE demo version of Fault Tree+ (Markov is found in Isograph's Reliability Workbench Suite) at isograph.com, then click on 'Free Trial'.

Unveiling the Power of Attack Trees in Cybersecurity and Beyond – Attack Tree

In the dynamic landscape of cybersecurity, the strategic use of Attack Trees has emerged as a powerful weapon to fortify defenses. These visual representations of potential attack paths offer numerous benefits, particularly in industries where security is paramount, such as aerospace and automotive.

Attack Trees provide a systematic way to assess and visualize potential threats, helping organizations understand the vulnerabilities within their systems. In the aerospace and automotive industries, where the consequences of a security breach can be severe, Attack Trees play a crucial role in identifying and mitigating risks. By mapping out potential attack scenarios, stakeholders can proactively implement robust security measures.

One of the key benefits of Attack Trees lies in their ability to simplify complex security assessments. They break down intricate attack scenarios into a hierarchical structure, making it easier for organizations to prioritize and address the most critical threats. This structured approach enhances decision-making, allowing for the efficient allocation of resources to safeguard against the most probable and impactful attacks.

Isograph's Attack Tree software takes these advantages to the next level, offering a comprehensive solution for various industries. The software enables organizations to create, analyze, and manage Attack Trees efficiently. Its user-friendly interface empowers users to assess and enhance their cybersecurity posture with ease.

Furthermore, the benefits of Attack Trees extend beyond immediate threat mitigation. Isograph's software allows organizations to create a repository of attack scenarios and responses, fostering a continuous learning environment. By sharing insights and best practices, industries can collectively strengthen their defenses against evolving cyber threats.

In conclusion, Attack Trees serve as invaluable tools in enhancing cybersecurity, particularly in industries with critical infrastructure like aerospace and automotive. Isograph's Attack Tree software emerges as a vital asset, providing a user-friendly platform to fortify defenses, streamline assessments, and foster collaborative learning. As cyber threats continue to evolve, leveraging Attack Trees becomes not just a strategy but a necessity for safeguarding the integrity of digital landscapes across diverse sectors.

Get a FREE Demo version of Attack Tree at isograph.com and click on 'Free Trial'.

Attack Tree

Autonomous vehicles are susceptible to cyberattacks through various pathways, making cybersecurity a critical concern. Isograph's Attack Tree software and methodology can help analyze and mitigate these risks effectively. Here are some common attack vectors on autonomous vehicles:

1. Wireless Communication: Hackers may exploit vulnerabilities in the vehicle's wireless communication systems, such as Wi-Fi or cellular networks, to gain unauthorized access.

2. Infotainment Systems: Infotainment systems are often connected to the vehicle's main network, providing a potential entry point for attackers to compromise other critical vehicle functions.

3. Remote Keyless Entry: Weaknesses in keyless entry systems can enable attackers to remotely unlock the vehicle, providing physical access to onboard systems.

4. Malicious Software: Attackers may inject malware into the vehicle's systems, disrupting operations or gaining control over critical functions.

5. Sensor Spoofing: Manipulating sensor data, such as GPS or lidar, can mislead the vehicle's perception systems, leading to dangerous driving decisions.

6. Supply Chain Attacks: Compromising components or software during the manufacturing or supply chain process can introduce vulnerabilities into the vehicle.

7. OBD-II Port: The On-Board Diagnostics (OBD-II) port provides a direct connection to the vehicle's internal systems, making it a potential entry point for attackers.

8. Over-the-Air Updates: Attackers may target software updates, injecting malicious code during the update process.

9. Human-Machine Interface: Manipulating the vehicle's interface or user inputs can confuse drivers or override safety systems.

10. Ransomware: Cyber criminals may deploy ransomware to lock the vehicle's systems, demanding a ransom for control restoration.

Isograph's Attack Tree software and methodology are valuable for identifying these potential attack paths and assessing their risks. They allow for the creation of detailed attack trees that visualize attack scenarios, their dependencies, and probabilities. This helps in prioritizing security measures and developing strategies to protect autonomous vehicles from cyber threats effectively. Additionally, Attack Trees enable organizations to communicate these risks clearly to stakeholders and regulatory authorities, fostering trust and safety in autonomous driving technology.
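The way an attack tree combines dependencies and probabilities can be sketched in a few lines. The example below is a generic illustration of AND/OR gate evaluation and does not represent Isograph's Attack Tree software or its calculation methods. The `Node` class, the sub-step names, and every probability value are hypothetical, and the child events are assumed to be independent.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """One node of an attack tree: a leaf step or an AND/OR gate over children."""
    name: str
    gate: str = "LEAF"        # "LEAF", "AND" or "OR"
    probability: float = 0.0  # success probability, used only by leaves
    children: List["Node"] = field(default_factory=list)


def success_probability(node: Node) -> float:
    """Probability that the attack at this node succeeds (children assumed independent)."""
    if node.gate == "LEAF":
        return node.probability
    child_probs = [success_probability(child) for child in node.children]
    if node.gate == "AND":   # every child step must succeed
        result = 1.0
        for p in child_probs:
            result *= p
        return result
    if node.gate == "OR":    # at least one child path must succeed
        all_fail = 1.0
        for p in child_probs:
            all_fail *= 1.0 - p
        return 1.0 - all_fail
    raise ValueError(f"unknown gate type: {node.gate}")


# Hypothetical tree built from three of the attack vectors listed above.
# The leaf probabilities are made-up numbers for illustration only.
infotainment = Node("Compromise via infotainment system", "AND", children=[
    Node("Exploit infotainment vulnerability", probability=0.10),
    Node("Reach critical functions over main network", probability=0.20),
])
ota_update = Node("Malicious over-the-air update", "AND", children=[
    Node("Gain access to the update process", probability=0.05),
    Node("Inject malicious code into an update", probability=0.10),
])
obd_port = Node("Access through OBD-II port", probability=0.01)

root = Node("Take control of vehicle functions", "OR",
            children=[infotainment, ota_update, obd_port])

# Rank the top-level attack paths so the most probable one is listed first.
ranked = sorted(root.children, key=success_probability, reverse=True)
for path in ranked:
    print(f"{path.name}: {success_probability(path):.4f}")
print(f"{root.name}: {success_probability(root):.4f}")
```

Ranking the top-level paths by probability is one simple way to decide where to spend mitigation effort first. A fuller analysis would also weigh the impact and cost of each path.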

Common Mistakes in Reliability-Centered Maintenance

Reliability-Centered Maintenance (RCM) is a systematic approach to managing the maintenance of assets to ensure their optimal performance, safety, and longevity. However, even with the best intentions, organizations can make common mistakes in their RCM processes that undermine its effectiveness. Three of these mistakes are over-focusing on singular strategies, a lack of training and documentation, and improper data collection.

One prevalent mistake in RCM implementation is an overemphasis on singular strategies, often exemplified by an undue fixation on developing preventive maintenance (PM) schedules. While preventive maintenance is a vital aspect of RCM, concentrating all efforts solely on this aspect can lead to a myopic approach. RCM is a comprehensive methodology that encompasses various strategies, such as predictive maintenance, condition-based monitoring, and run-to-failure, in addition to preventive maintenance. An exclusive focus on PM can lead to inefficiencies, unnecessary costs, and missed opportunities to optimize asset performance. To avoid this mistake, organizations should strive for a balanced approach, considering all suitable maintenance strategies based on asset criticality and operational context.

Another common error in RCM is the neglect of training and documentation. Effective RCM implementation requires a well-trained team with a deep understanding of the methodology. Inadequate training can result in misinterpretations of asset criticality, inappropriate maintenance strategies, and inconsistent decision-making. Furthermore, documentation is essential to maintain a clear record of RCM analyses, decisions, and actions. A lack of documentation can hinder knowledge transfer, making it challenging to sustain RCM practices over time. Organizations should invest in comprehensive training programs for their personnel involved in RCM and ensure that documentation is meticulous and accessible.

Improper data collection represents a critical pitfall in the RCM process. RCM relies heavily on accurate data to make informed decisions regarding asset maintenance. Inaccurate or incomplete data can lead to incorrect assessments of asset criticality and performance, resulting in inappropriate maintenance strategies. Organizations often fall into the trap of relying on outdated or unreliable data sources, undermining the effectiveness of their RCM efforts. To rectify this mistake, organizations should establish robust data collection and management processes, regularly review and update data sources, and invest in advanced technologies like sensors and data analytics to enhance data accuracy.

In conclusion, while Reliability-Centered Maintenance offers numerous benefits in terms of asset reliability and cost-effectiveness, organizations can make common mistakes that hinder its successful implementation. These mistakes include an over-focus on singular strategies, neglecting training and documentation, and improper data collection. Recognizing and addressing these mistakes is essential for organizations seeking to derive the maximum value from their RCM initiatives. A holistic and balanced approach, coupled with adequate training, comprehensive documentation, and accurate data collection, can lead to more successful RCM implementations and improved asset performance.

Why your organization should consider Isograph’s Attack Tree & Threat Analysis software

Have you tried Isograph's Attack Tree & Threat Analysis software? Here are some reasons why you might want to consider testing it out for your organization.

Attack Tree and Threat Analysis software are powerful tools that can help organizations identify and mitigate potential security risks. By using these tools, organizations can gain a better understanding of the potential threats they face and develop strategies to protect their assets and data.

The benefits of using Attack Tree and Threat Analysis software are numerous. First and foremost, these tools can help organizations identify potential vulnerabilities in their systems and networks. This allows organizations to take proactive steps to secure their systems and prevent attacks before they occur.

Additionally, Attack Tree and Threat Analysis software can help organizations prioritize their security efforts. By identifying the most likely and most severe threats, organizations can focus their resources on the areas that are most in need of attention. Another benefit of using Attack Tree and Threat Analysis software is that it can help organizations comply with regulatory requirements. Many regulations, such as HIPAA and GDPR, require organizations to perform regular risk assessments and take steps to mitigate potential threats. By using these tools, organizations can ensure that they are meeting these requirements and avoiding potential penalties.

Overall, all organizations, regardless of size or industry, can benefit from using Attack Tree and Threat Analysis software. By identifying potential threats and vulnerabilities, prioritizing security efforts, and ensuring regulatory compliance, these tools can help organizations protect their assets and data and maintain their reputation and customer trust.

Get a FREE demo version of the software at www.isograph.com and click on 'Free Trial'.

Customizing Availability Workbench (AWB) Reports

One of the most important aspects of your reliability or safety studies is the creation of professional standard reports that will enable you to present the results in a clear and understandable form to colleagues, management, customers and regulatory bodies.

How can I use the Report Designer?

The Isograph reliability software products share a common facility to produce reports containing text, graphs or diagrams. Your input data and output results from reliability applications are stored in a database. This information can be examined, filtered, sorted and displayed by the Report Designer. The Report Designer allows you to use reports supplied by Isograph to print or print preview the data. A set of report formats appropriate to the product is supplied with each product.

You can also design your own reports, either from an empty report page or by copying one of the supplied reports and using that as the starting point. Reports may be published or exported to PDF and Word formats.

How do I make a Text Report?

Designing a text report is simplicity itself. The steps required are as follows:

1. Select the paper size and margins.

2. Select a subset of the whole project database information to be included in the report by one of two options. The first option contains a list of pre-defined selection criteria corresponding to one or more tables in the database. Each criterion will result in a list of available fields. The alternative method is based on using SQL commands to query the database. Each field selected will be represented by a column in the report.

3. Specify which fields are required in the report and the ordering of the columns in which the information will appear.

At this point, the user may select print preview and a basic report will be displayed. The design process may continue in print preview mode if required. If required, additional data such as page numbers, the current date and sums of field values may be displayed in the header and footer areas using the text boxes combined with report macros. Pictures in all the common formats (gif, jpg, bmp etc) may also be displayed in the footer or header.
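The SQL-based selection described above can be pictured with a small, self-contained sketch. It uses Python's built-in `sqlite3` module and an in-memory database purely for illustration. The `components` table, its column names, and the sample rows are hypothetical and do not reflect the actual schema of an Isograph project database or the Report Designer's own query dialog.

```python
import sqlite3

# Hypothetical stand-in for a project database; the real schema will differ.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE components (id TEXT, description TEXT, failure_rate REAL)"
)
conn.executemany(
    "INSERT INTO components VALUES (?, ?, ?)",
    [
        ("PUMP-1", "Cooling pump", 2.5e-5),      # made-up sample data
        ("VALVE-1", "Isolation valve", 4.0e-6),
        ("MOTOR-1", "Drive motor", 1.2e-5),
    ],
)

# The SELECT list decides which fields become report columns and in what order;
# WHERE filters the rows and ORDER BY sorts them.
cursor = conn.execute(
    "SELECT id, description, failure_rate "
    "FROM components "
    "WHERE failure_rate > ? "
    "ORDER BY failure_rate DESC",
    (1e-5,),
)
columns = [col[0] for col in cursor.description]
rows = cursor.fetchall()
conn.close()

# Print a plain-text table: one column per selected field.
print(" | ".join(columns))
for row in rows:
    print(" | ".join(str(value) for value in row))
```

Each field named in the `SELECT` clause maps to one report column, which mirrors the behaviour described for the report's field selection.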

Graph reports can contain one or more graph pages, each of which in turn can contain one or more graphs. The graphs themselves can display one or more plots. Each graph has axes, labels, legends and titles that are configured using simple dialogs. Some of the main features are described below:

* 3-dimensional graph types have the added facility of setting the viewing angle by moving slider controls that alter the rotation and elevation angles.

* Easily select and filter the data to be plotted.

* Use the standard report types that are supplied with the software or design your own graph reports by either modifying existing reports or creating completely new ones.

* The size and position of the graph area within a report page can be set either through a dialog or more intuitively by the use of the mouse.

* A graph contains a rich array of attributes, each of which can be easily set through the use of a tabbed dialog.

* Plot data as 2-D line, bar, pie, area and scatter graphs or 3-D bar, pie, scatter and tape graphs.

* Plot log/lin, log/log or lin/log graphs. These graphs can be plotted in various vertical or horizontal styles.

* Print graph reports directly to color or monochrome devices or export them in Rich Text Format (RTF). The RTF file can then be imported into other products such as Microsoft Word, where it can be combined into a larger document and then printed.

If required, additional data such as page numbers, the current date and sums of field values may be displayed in the header and footer areas using the text boxes combined with report macros. Pictures in all the common formats (gif, jpg, bmp etc) may also be displayed in the footer or header.

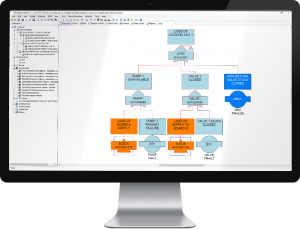

Generating Diagram Reports in Report Designer

Many of the Isograph software products use diagrams to represent the system logic. Examples are reliability networks and fault trees. Using the report generator it is possible to include these diagrams in a report. A diagram report may consist of one or more pages; for example, a fault tree diagram may be spread across many pages. Some of the main features are described below:

* The vertical and horizontal indents in the drawing area may be specified.

* Whether the diagram should maintain its aspect ratio or fit to the drawing area may be specified.

* When used in conjunction with programs such as Reliability Workbench, which contain multiple software modules, the category of diagram to be displayed may be selected.

As with graph and text reports, diagram reports can be either printed out directly to color or monochrome devices or exported in Rich Text Format (RTF). The RTF file can then be imported into other products such as Microsoft Word where it could be combined into a larger document and then printed.

Availability Workbench – Batch Creating Failure Models

Learn how to create failure models in Isograph's Availability Workbench with Bill Keeter

https://www.youtube.com/channel/UC-zojskxj5yRnQjqNztpdkg

RCMCost – Intro – Part of Availability Workbench Suite

Reliability Centered Maintenance Software

* Predict asset performance

* Optimize your maintenance strategy

* Reduce operational risks

* Improve profitability

* Increase uptime and lower costs

* Optimize spares holdings

* Simulate predictive maintenance

* Meet safety, environmental and operational targets

* Supports standards such as SAE JA1011, MSG-3 and MIL-STD-2173(AS)